The era of the perfectly safe connected device is over. Perfect safety is, and perhaps always has been, a fiction. The discomforting reality is that pacemakers can be hacked. Patch pumps are vulnerable. And hospital information ecosystems, for the person looking to cause mayhem, are woefully undefended. Wireless connectivity in medical devices is to some less a great data-enabled advance than a weakness to be exploited. It’s frightening stuff, and as WIRED pointed out, we’re not prepared: “You'd think by now medical device companies would have learned something about security reform. Experts warn they haven’t.” And it’s not just medical devices: even toys—connected toys!—are susceptible to hacking.

As designers of the connected future, we can’t afford to pretend that we live in a more innocent age. Problem is, designing for safety, connected or otherwise, requires a vigorous flip in thinking, and such acrobatics could well interfere with the innovation process. At Continuum, we’ve always looked at innovation holistically, which means working to achieve a systemic understanding of any IoT-enabled product. But we’re also aware that certain capabilities are beyond our ken, which is why networked innovation is critical, and one of these is security. We understand that security is a connected value, but it’s not our area of expertise. We don’t typically focus on the risks our innovations pose, but instead zero in on making them as human-centered as possible.

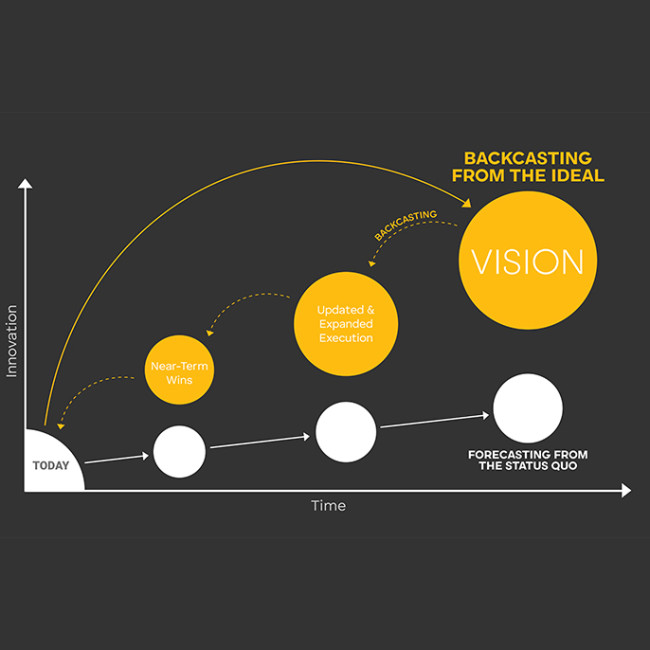

Backcasting is one of the ways we innovate. We identify a longer-term ideal future state, work with clients to build near-term wins, and ultimately design and implement the strategy to scale that work. Our thinking is existentialist, because it envisions the future and then creates it. Our attitude is optimistic, because we know that open-mindedness is necessary for seeing new possibilities and creating them. But not everyone focuses on the ideal future. There are bad actors out there who actively seek to create worst-case scenarios. Catastrophe is a blind spot for innovators. We’re so invested in creating a path to our ideal future state that we don’t look at how things can go terribly wrong. (Charlie Brooker and his Black Mirror vision of the future wouldn’t fit in at Continuum.) To our mindset, it seems almost inconceivable that some people and organizations consciously seek to turn present-day reality into a dystopia. And it doesn’t even require malice for things to come out drastically worse than we intended. Perverse incentives and negative feedback loops can lead systems to many kinds of failure, such as the tragedy of the commons.

As the many stories about the dangers of medical hacking make clear, someone should be actively imagining worst-case scenarios, and the ways to prevent them. And sooner rather than later. It’s wonderful to think of the promise of, say, virtual reality, but you’d better also consider the unsettling idea of trolls infesting that digital space. To not do so is irresponsible. The smart thing is for us all to find partners who specialize in envisioning the worst happening. Disaster planning could occur as a parallel activity to innovation.

Worst-Case Scenario Planning

An intelligent way to view security threats is to start from the worst-case scenario and work back toward the present. Imagine the least ideal state, and then create staged barriers to prevent us from ever reaching it. Moving from the most intolerable situation, to more tolerable ones, to the current state, will give the security folks a perspective that could well lead to innovative solutions for thwarting bad actions. Think, too, about situational context. A device with Bluetooth may need different security measures depending on whether it’s used in a public space, on a local network, or in the privacy of someone’s home. It would also need different measures depending on whether it can be reached from the internet through another paired device.
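To make the point about situational context concrete, here is a minimal sketch in Python of how a team might record which safeguards each deployment context calls for. Everything in it is an assumption made for illustration: the context names, the safeguard labels, and the `required_measures` helper are hypothetical and are not a vetted security baseline.

```python
from enum import Enum


class Context(Enum):
    """Hypothetical deployment contexts for a Bluetooth-enabled device."""
    PUBLIC_SPACE = "public space"
    LOCAL_NETWORK = "local network"
    PRIVATE_HOME = "private home"


# Illustrative safeguard labels only; a security partner would define the real list.
BASELINE = {"encrypted link", "authenticated pairing"}
EXTRA_BY_CONTEXT = {
    Context.PUBLIC_SPACE: {"pairing only on physical button press"},
    Context.LOCAL_NETWORK: {"network segmentation"},
    Context.PRIVATE_HOME: set(),
}
INTERNET_EXTRAS = {"signed firmware updates", "access logging"}


def required_measures(context: Context, internet_reachable: bool) -> set:
    """Combine baseline, context-specific, and internet-exposure safeguards."""
    measures = BASELINE | EXTRA_BY_CONTEXT[context]  # new set; BASELINE is untouched
    if internet_reachable:
        measures |= INTERNET_EXTRAS
    return measures


for context in Context:
    for reachable in (False, True):
        label = "internet-reachable" if reachable else "offline"
        print(f"{context.value} ({label}): {sorted(required_measures(context, reachable))}")
```

The value of a table like this is less the code than the conversation it forces: every context gets an explicit answer, even if that answer is "nothing extra."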

One approach we take at Continuum is to include stress-tests in our prototyping. We imagine the contingency scenarios that factor in “What if?” events outside our control. We build these into the experience we are testing in order to understand the impact on how people perceive the product or service.

This kind of thinking helps develop the mindset needed to create newer, better guardrails. It’s not hard to imagine plotting this more completely alongside our standard backcasting model. Or even creating some kind of safety dashboard that a client could use as an idea gets implemented.

The exercise can also help an organization rethink its current vulnerabilities and even make a device safer than it currently is. It’s about doing excellent fieldwork to get the most in-depth understanding of reality, and then using that information to improve. The trick is to create a realistic worst-case scenario. Imagination and judgment combine to assess and address risk. The goal is not merely to imagine the most outlandish and catastrophic outcome possible. Probability must factor in.
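One common way to let probability factor in is to score each scenario on likelihood and impact and rank by the product. The sketch below assumes a simple 1-to-5 scale for both; the `Scenario` class, the example scenarios, and their scores are all made up for illustration and do not come from any real assessment.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Scenario:
    """A hypothetical worst-case scenario with rough, team-estimated scores."""
    name: str
    likelihood: int  # 1 (rare) to 5 (almost certain)
    impact: int      # 1 (minor) to 5 (catastrophic)

    @property
    def risk(self) -> int:
        """Risk score as likelihood times impact."""
        return self.likelihood * self.impact


def rank_scenarios(scenarios):
    """Return scenarios sorted from highest to lowest risk score."""
    return sorted(scenarios, key=lambda s: s.risk, reverse=True)


# Invented examples: scores are placeholders, not findings.
scenarios = [
    Scenario("Attacker alters pump dosage over Bluetooth", likelihood=2, impact=5),
    Scenario("Usage data leaks from a paired phone", likelihood=4, impact=3),
    Scenario("Meteor strikes the data center", likelihood=1, impact=5),
]

for s in rank_scenarios(scenarios):
    print(f"{s.risk:>2}  {s.name}")
```

With these placeholder scores, the outlandish catastrophe lands at the bottom of the list, while the less dramatic but more likely data leak rises to the top.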

Engineering Some Good Questions

Continuum has strong mechanical and electrical engineering teams. The thing to note about these people: they don’t just provide technological know-how; they ask a lot of questions, detailed ones, that drag some of our more fanciful ideas down to earth. And in doing so, they help make our innovations safer than they might otherwise be. Given a chance, they'll pose all kinds of smart queries as we develop devices, including the following:

Who should have access to your prototype? Will users need to create an account? Who will be able to make changes to the prototype? Are there privacy or security provisions that must be in place for new information you collect? Is there an established way to access actual customer data in an unfinished product, while maintaining your organization’s standards? Are your development and legal teams equipped to make decisions on where strict processes and oversight are necessary, and where they can be significantly relaxed?

So, while engineers aren’t privacy experts per se (our folks are software engineers, networking engineers, human factors engineers, and more), their evidence-based frame of mind and clear, inquisitive sense of pragmatism can help ensure that things will be more safely designed. Bring them into your safety-evaluation process, and don’t let them leave.

Hackers are People, Too. Talk with Them. Regularly.

One of the reasons we excel at prototyping is that we continually take our stuff and present it, in the proper context, directly to the people who will be using it. We excel at designing the tests around our prototypes, in order to elicit the information needed to move forward. This process could very easily be transferred to the idea of security testing.

What if, at various stages, we presented our IoT devices to white hat hackers (these are, for the uninitiated, hackers who test and evaluate security systems for system owners) for their feedback? That would surely be a more effective way of testing for security than merely relying on academic opinion or computer models. We could easily imagine working with IoT security experts to design, arrange for, and learn from security testing. Treating hackers as end users could prove extremely beneficial to everyone involved. One of the reasons medical devices are so unprotected is that the companies that create these innovations don't have access to the hacker mindset. We don’t either, but we know how to get into the heads of people who use goods and services, and could transfer this skill into the realm of security.

Make It Safe as You Make It Real

The future needs people who can imagine the worst, to complement those whose job it is to imagine the best. It’s not a fanciful idea—but one that we need to act on, if we’re going to make the future both safe and real. Let’s do it!